“A comprehensive, contemporary, and research-supported treatment of online learning. Engaging with this work can encourage conversation, inspire reflection, and improve practice for anyone involved in online teaching and learning,” says Deborah Adair, Ph.D., Executive Director and CEO of Quality Matters, in her review of Fielding’s “Handbook of Online Learning in Higher Education.” Because the book was released in 2021, during the COVID-19 pandemic, it’s no surprise that the “Handbook of Online Learning” has become a go-to source for universities and educators challenged to pivot and build online learning programs and curricula from scratch. For others in higher education who have embraced the new opportunities that online learning offers, the “Handbook” has also been a trusted guide.

Alma Boutin-Martinez, Ph.D.

There is plenty to unpack in this reference book of more than 600 pages. In an occasional series of conversations, we’ll chat with some of its authors and other higher education experts about the important online learning issues they address in the book, and more. In this first installment, we speak with Alma Boutin-Martinez, Ph.D., Fielding’s Associate Provost for Teaching, Learning, and Assessment. She co-wrote the chapter “Evaluation and Assessment Models in Online Learning” with J. Joseph Hoey, Ed.D., and Lorna Gonzalez, Ph.D.

This interview has been edited lightly for length and clarity.

A good place to start is with the basics. Help us understand two key concepts of online learning, assessment and evaluation, which you and your co-authors discuss in Chapter 16 of the “Handbook of Online Learning.”

Yes, that is a good starting place. In our chapter, we incorporated Banta and Palomba’s definition. They are two leading authorities on assessment and define it as “the process of providing credible evidence of resources, implementation actions, and outcomes underlying a purpose for improving the effectiveness of instruction, programs, or services in higher education.”

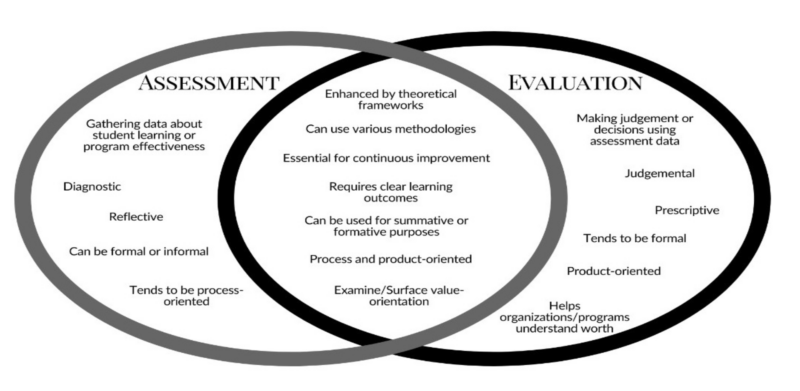

In contrast, “evaluation applies judgment to data that’s gathered and interpreted through assessment,” according to Banta and Palomba. In the “Handbook of Online Learning,” my co-authors and I developed a Venn diagram that shows the similarities and differences between assessment and evaluation.

Note: Used by permission of the authors.

Hoey, J., Boutin-Martinez, A., & Gonzalez, L. (2021). Assessment and evaluation of online learning. In K. E. Rudestam, J. Schoenholtz-Read, & M. L. Snowden (Eds.), Handbook of Online Learning in Higher Education. Fielding University Press.

Your chapter introduces assessment from the perspective of diversity, equity, inclusion, and accessibility (DEIA). Can you talk a bit about how you see online learning through a DEIA lens and the role of assessment and evaluation?

The COVID-19 pandemic has significantly impacted teaching and learning in higher education and K-12 systems and forced massive shifts from classroom to online course delivery. At the same time, important issues like racial and economic disparities, access, and mental health challenges have surfaced and are affecting the delivery of online learning and student success. So, as those in education grapple with this shift, effective assessment and evaluation practices and models are more important than ever.

In the chapter, we provide an overview of the CIPP Evaluation Model, the Outcomes Assessment Cycle, and the Peralta Online Equity Rubric. They can be used to support continuous improvement and to measure how programs are doing. At the same time, assessment and evaluation should also focus on ways to ensure equity for students and, as a result, remove barriers to their learning. Ultimately, the goal is to support the success of all students.

Much of what educators and universities are learning about and implementing in online learning has evolved rapidly as the pandemic disrupted classroom instruction. In the process, have assessment and evaluation in online higher education become an afterthought? What have you noticed, before the pandemic and now?

Great question. Well, before the pandemic, the Peralta Online Equity Rubric was created to provide a structured form of support for learning environments to ensure that they are inviting, inclusive, and meaningful for all learners. The rubric is grounded in research and focuses on aspects of online courses that can negatively affect students’ learning and success.

Some of the domain areas include accessibility and meaning, such as linking resources and topics to various backgrounds, identities, and cultures. Another domain is valuing diversity and inclusion. One way to apply it is by including a diversity and inclusion statement in a course syllabus. This year, Fielding added a Commitment to Diversity section to its syllabus templates. Peer-to-peer engagement, student support, and universal design for learning are other criteria found in the Peralta Rubric.

Around 2019, connection and belonging were added to the Peralta Rubric, and I think this addition is absolutely critical, especially during the pandemic. I say that because people were isolated and not able to leave their homes. There is interesting research on student motivation, on how to create an inviting environment, and on how to deepen connections between students. Aspects of the Peralta Rubric, such as ways to promote connection and belonging, can also be used by instructors who teach in-person courses.

Another tool I see trending is the Community of Inquiry Survey, which is a great assessment instrument. It was developed to examine learners’ perceptions of the learning experience across cognitive, social, and teaching domains.

This survey is a great way to get a sense of the learning environment. During the pandemic, it’s even more critical for us to be aware of these different domains, obtain feedback from students, and reflect on their learning experiences to support continuous improvement. That feedback could cover, for instance, students’ engagement in the course, their interaction with content, and course design and facilitation.

Can you talk about significant changes and trends in online learning and the impact on assessment and evaluation? No doubt, the pandemic has been a driver.

Key, I think, is the need to be explicit about course expectations, tasks, learning outcomes, and criteria for success. Colleagues have told me that this focus, already popular before the pandemic, has become even more so. The Transparency Framework was developed to promote the success of all learners. It is a useful framework because it highlights the need to be explicit and transparent with learners about what they’re being asked to do in the course: for example, how the coursework will help them develop their skills and what criteria will be used to evaluate their assignments.

So, this framework helps make sure expectations about coursework are clear and that learners understand how their papers and assignments will be evaluated. It also helps them see how their work connects to learning outcomes that can support their development.

Learn more about the “Evaluation and Assessment Models in Online Learning” chapter and hear from its three authors: https://youtu.be/yVCcsg1jY90.
